AI-Powered Cyber Tabletop Exercise Tool: Research, Design & MVP

I designed and prototyped an AI-powered cybersecurity simulation platform for enterprise teams, reducing the time and cost of running tabletop exercises by automating scenario generation, facilitation, and evaluation.

🤘 Outcome

What used to take facilitators weeks to prepare and cost companies tens of thousands of dollars can now start in 2 minutes. Exercises are fully customizable, can run anywhere from minutes to hours, and evaluations are instant, saving both time and money. So far, this is the most resource-efficient training available.

✅

~99% Faster Setup (not kidding)

✅

Instant Evaluation

What used to take hours or even days to complete can now be done instantly, removing one of the biggest pain points in traditional tabletop exercises.

✅

Higher Engagement

Adaptive narratives, industry-relevant artifacts, and dynamic responses replace boring slides with realistic, interactive scenarios.

✅

Lower Cost

No facilitator is needed, and shorter exercises save time and money while easing the regulatory burden on CSIRTs and management teams.

ℹ️ Who is TryHackMe

TryHackMe is a leading online cybersecurity training platform, used by individuals, academic institutions, and corporate security teams. Over 6 million users and 1,000+ enterprise teams rely on it, and I got to interview some of them for insights on TTX challenges.

🎲 What is a Tabletop Exercise

A tabletop exercise (TTX) is a simulated cyber incident in which teams respond to realistic threats in a safe environment. Think “cyber crisis rehearsal,” minus the panic of a real breach.

❗Problem

Running cyber exercises is costly and time-consuming, so companies often miss the chance to test and improve their teams’ response. As a result, many companies aren’t ready for cyber attacks and end up taking bigger hits than necessary.

😫 "We would run more exercises if we had the resources. Without them, we stay underprepared…"

👑 My Role

I led user research, defined key problems, designed and built an AI-powered MVP, and iterated on it through feedback to deliver production-ready designs.

⚙️ Methods used

My process here may look neat and linear, but it was more of a tangle and a loop in real life.

🔎

Research

Desk study

Surveys

Competitors' solutions review

Interviews (users, clients & SMEs)

Contextual inquiry

🎯

Define

Affinity diagram

Personas

⚙️

Ideate

Task flows

Sketch

HMW workshop

🎨

Iterative design

Built MVP with an AI app builder

Concept testing & iteration

Low & high-fi designs

UI audit on production

🎯 Research Goals

We kicked things off with desk and internal research: reading studies and blogs, watching videos and conference talks, and spending a lot of time on Reddit (it soon became my best buddy).

We were lucky to have in-house cybersecurity experts who helped us make sense of TTX: what it actually is, who’s involved, what challenges they face, and the language they use. That helped us figure out where to focus our research.

Companies: Understand why companies often neglect tabletop exercises.

Research Methods: Survey, Client interviews, Contextual inquiries

Competitors: Understand available solutions and their pros and cons.

Research Methods: Competitive analysis, Client interviews

Participants: Understand the pain points of participants and facilitators in tabletop exercises.

Research Methods: User interviews, Survey, Contextual inquiries

🤔 Hypothesis

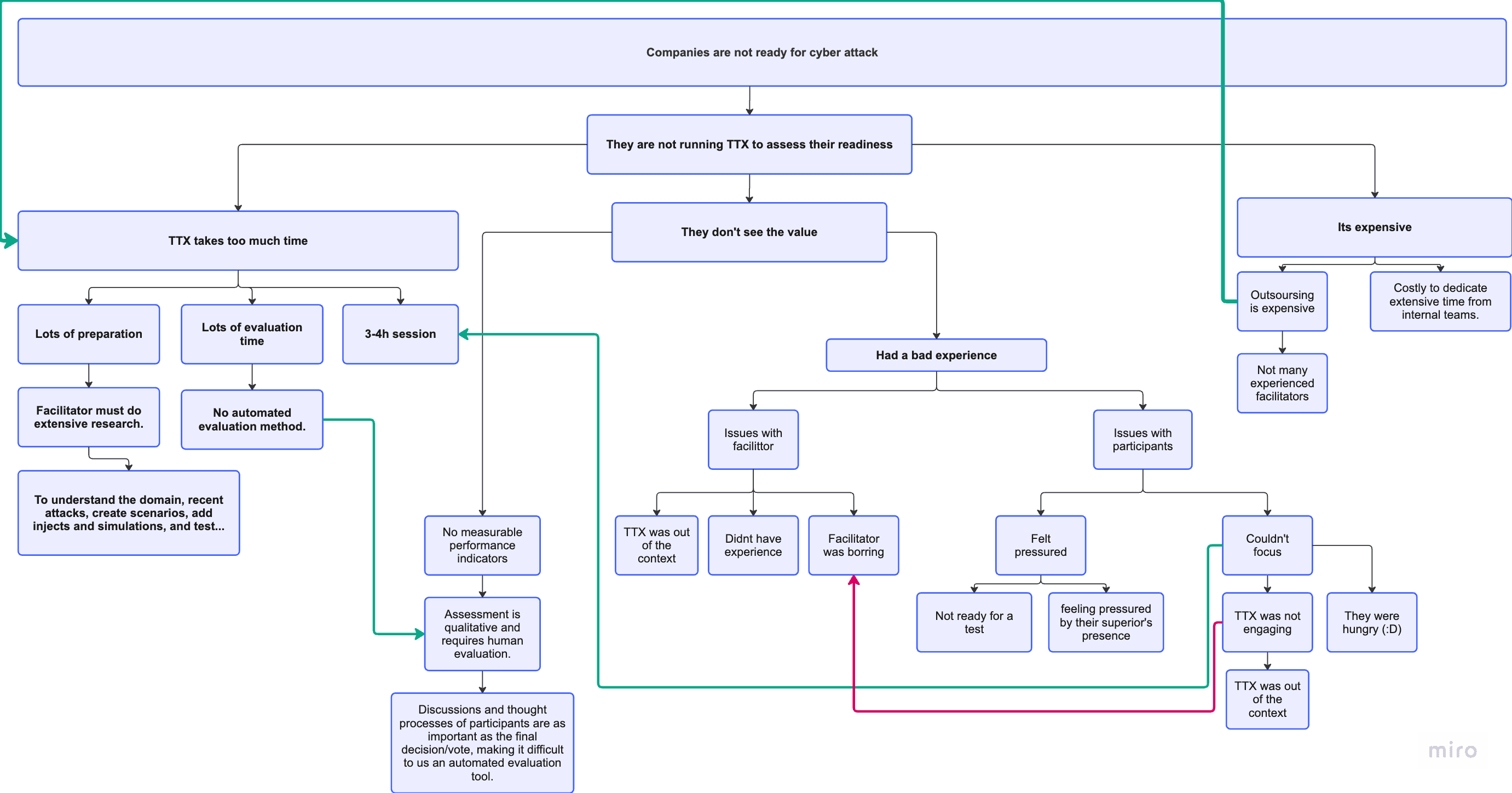

The desk and internal research gave us a solid starting point, helped identify what we still needed to learn, and allowed us to form our initial assumptions about the problem. We then created a hypothesis tree to guide our research and prioritized the key areas to focus on.

Hypothesis creation process

Screenshot from the hypothesis creation process.

Hypothesis 1: TTXs are seen as expensive because they take a lot of time and human resources, so companies don’t run them as often as recommended.

Hypothesis 2: TTXs depend heavily on the moderator’s skill, which makes them stressful and hard to manage.

Hypothesis 3: It’s hard to measure how well people do in TTXs, so companies struggle to show clear results and, in turn, to see their value.

Hypothesis 4: Some companies don’t think they’ll be attacked, so they don’t see TTXs as worth the time or cost.

❓Survey

Given how niche our market was, we knew upfront that we wouldn’t have enough participants for statistically significant data. We still ran surveys to generate ideas and figure out where to focus next, and they also helped us collect contact info for follow-up interviews. We ran two surveys:

One for our clients about their challenges and opinions on TTX (thanks to our Customer Success team for helping share it).

One for TTX participants, shared through our Discord channel.

Some screenshots from the survey results

💬 User & Client Interviews: Affinity Diagram

We started with broad questions about the purpose and goals of TTX, then moved into specific challenges and pain points. We kept interviewing even while sketching ideas, and each round helped us test or adjust our thinking.

Recruiting TTX users or facilitators was tough. There just aren’t that many companies running TTXs, which is part of the problem we’re trying to solve. Luckily, our customer success team was amazing and helped us bring in participants, including some tech giants.

In total, we had 10 interviews. These were remote, semi-structured interviews, each lasting 40 minutes to an hour. Some patterns started to emerge as early as the second interview.

⚖️ Competitive review

This was one of those steps we never fully started or finished. It ran throughout our process, while we were coming up with the idea, researching, defining, and designing the solution.

Our competitors kept their products pretty hidden. We had to dig through Vimeo and YouTube to find anything, and some videos had fewer than 10 views (mostly from our team, I bet). We also read Reddit threads, joined Discord discussions, and tested the most commonly mentioned formats ourselves, such as the Dungeons & Dragons-style TTX.

Ironically, our biggest competition is PowerPoint. But if everyone agrees slide decks are boring, why are they still the default? It’s not clear why others haven’t nailed it.

After identifying the key needs and pain points, we took another look at competitor products. This time, instead of doing my usual SWOT or feature comparison, I focused on how each product actually solves the problems we uncovered.

🔍 Interview & Contextual Inquiry Findings

Since presentation-style TTX exercises are the most common, we decided to go through one ourselves, from start to finish, to understand how they work and where things break down.

We brought in the MWR team to plan and run it. The full process had seven steps: initial call, onboarding doc, slide creation, artifacts, CSIRT TTX, CMT TTX, and the evaluation debrief. It wasn’t an easy decision. Hiring the team was expensive, and it meant dedicating our tech team and executives for two days, with a four-hour session on each.

We sat in on all seven calls (4–7 hours each), asking questions and watching them build the materials live. It gave us a real feel for the work facilitators do.

It was a big effort, but the hands-on experience was worth it. Afterward, I mapped out all the steps and challenges we faced along the way.

👽 Personas

When we began our research, we started with persona hypotheses and assumptions. After the interviews, we updated the personas based on what we learned, and this is what we created.

CSIRT TTX participant: Handles the technical side of the cyber crisis, like SOC simulations, forensics, and IT systems.

Crisis Management TTX participant: Focuses on fast decision-making and managing the response during the crisis.

Facilitator: Plans the TTX, builds the storyline, runs the session, keeps the team on track, and evaluates how it went.

TTX Customer: Decides whether to buy or approve the use of the TTX product.

CSIRT TTX PARTICIPANT

Responsibilities

Uses technical tools and simulations to detect, contain, and eradicate an attack

Refers to playbooks to ensure actions are aligned with procedures

Communicates with teammates to find solutions

Reports findings to higher management

Escalates to Crisis Management team

Keeps track of time to ensure tasks are completed within the deadline.

"If the scenario is not relevant to the organization, it's very easy to lose focus."

Goals and needs

Hands-on experience with simulations and relevant context.

Identify potential gaps in the organization’s incident response plans.

Improve coordination and communication with team members during incidents.

Test and improve the effectiveness of existing incident response plans and tools.

Problems

Shy and uncomfortable speaking in front of large teams.

Finds sessions that are too theoretical or slide-based unengaging and less useful.

Frustrated when TTX tools and simulations don’t accurately reflect real-world environments or when they malfunction.

🧠 "How Might We" workshop

We ran a “How Might We” (HMW) workshop to brainstorm solutions. Many of the ideas involved artificial intelligence, which our co-founder Ashu and the entire team happily embraced.

🔀 Task Flows

In the MVP, the AI performs three key functions:

Generates realistic tabletop exercise scenarios based on the organization’s playbooks and examples provided by our content engineers.

Facilitates the exercise discussion by guiding roles and injecting events.

Provides real-time evaluation with lessons learned.
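The three AI functions can be pictured as a simple pipeline: generate a scenario, loop through injects while collecting each role’s response, then evaluate the transcript. Below is a minimal sketch of that structure under stated assumptions. `Model` is a hypothetical text-in/text-out stand-in for the GPT-4 API used in the MVP. `Exercise`, `generate_scenario`, `facilitate`, and `evaluate` are illustrative names, not the MVP’s actual code, and the prompts are placeholders.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical model interface: prompt in, text out. In the MVP this role was
# played by the GPT-4 API; the usage below swaps in a stub so no calls are made.
Model = Callable[[str], str]


@dataclass
class Exercise:
    scenario: str
    roles: list[str]
    log: list[str] = field(default_factory=list)


def generate_scenario(model: Model, playbook_summary: str, examples: list[str]) -> str:
    """Stage 1: ground the scenario in a (sanitized) playbook plus curated examples."""
    prompt = (
        "Write a realistic cyber tabletop exercise scenario.\n"
        f"Organization playbook (sensitive details removed): {playbook_summary}\n"
        "Reference scenarios from the content team:\n"
        + "\n".join(f"- {e}" for e in examples)
    )
    return model(prompt)


def facilitate(model: Model, ex: Exercise, steps: int,
               respond: Callable[[str, str], str]) -> None:
    """Stage 2: at each step, ask the model for an inject, then record each role's response."""
    for step in range(1, steps + 1):
        context = "\n".join([ex.scenario, *ex.log])
        inject = model(f"Step {step}: write the next inject for this exercise.\n{context}")
        ex.log.append(f"INJECT {step}: {inject}")
        for role in ex.roles:
            ex.log.append(f"{role}: {respond(role, inject)}")


def evaluate(model: Model, ex: Exercise) -> str:
    """Stage 3: turn the full transcript into an evaluation with lessons learned."""
    transcript = "\n".join(ex.log)
    return model(f"Evaluate this exercise and list lessons learned:\n{transcript}")


# Usage with stubs standing in for the model and the participants.
stub_model: Model = lambda prompt: "stub output"
scenario = generate_scenario(stub_model, "Isolate infected hosts first",
                             ["Ransomware on a file server"])
ex = Exercise(scenario=scenario, roles=["SOC analyst", "IT lead"])
facilitate(stub_model, ex, steps=2, respond=lambda role, inject: "ack")
report = evaluate(stub_model, ex)
```

Passing the model in as a plain function keeps the flow testable without network calls. It also makes it easy to swap in a different provider if security requirements rule one out.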

I spent a full day with Ashu (Co‑founder), Marta (Head of Content Engineering), and Dan (Product Manager) aligning on this vision. The chart below shows the result of our discussion.

✍🏻 Sketching

Sketching is my favorite part. It doesn’t even feel like work. I sketch ideas everywhere: on the bus, train, plane, even while waiting at the doctor’s office. The tricky part is I end up with so many sketches that it’s hard to decide what to share with the team and what to keep in the vault.

Here are some examples I sketched for the CSIRT TTX exercises:

💨 MVP with the Bolt.new AI App Builder

Built an MVP with Bolt.new using the GPT-4 API for scenario generation.

Created real-time chat flow logic with basic JS and Python scripts, with help from my prompt engineer teammates.

Integrated mock data for scenario evaluation in collaboration with our amazing prompt engineers.

It took some time to get the multiplayer chat working, so big thanks to our software engineers for that. As you can see, this project was truly a team effort.

An MVP created using Bolt.new

🧪 Concept testing

With help from our CE team, we brought in another round of clients to test our quick MVP and value prop. We ran 10 interviews with cyber teams from major companies across different maturity levels.

The big win: clients were excited, asking, “When can we use this?” and “We’d start as soon as it’s ready.”

The main concern: AI behavior, accuracy, and trust.

Positive feedback

Clients loved the idea. Client #2 summed it up well: “It’s not something you couldn’t do on your own, it’s something you don’t have time to do on your own.”

Running the TTX through a chat with AI got positive feedback. Some were cautious about accuracy, but as client #5 put it: “If you get the accuracy right and AI doesn’t hallucinate, I’m down for it and ready to use tomorrow.”

The flow for creating scenarios, the questions, and the time-steps all got great feedback.

The tools/network diagram got a lot of “wow”s :D, even though a few clients suggested extra tools.

The evaluation also got a lot of positive feedback. Client #3 said, “It would take us a long time to create this numbers at this level, this is very useful,” and we heard similar comments from other clients.

Surprisingly, some clients said they’d be open to uploading their playbooks (after removing sensitive info) to create more custom scenarios.

Negative feedback

If every exercise ends in escalation, it might feel like the technical team always fails, which could be discouraging.

The product is very different from what's commonly used (like PowerPoint), which could make adoption and trust harder.

AI hallucinations and lack of trust in AI are real concerns. Client #8 said, “At some point I am going to be nervous about what is this AI going to spit out to my team members, employees, I am nervous how is AI going to engage them, what is going to demand of them.”

Using AI in cybersecurity training might worry customers because of security risks. Two clients said they can’t use AI tools for security reasons, but another said, “We don’t use anything that doesn’t have AI.”

Clients questioned the rationale behind the company health metrics and evaluation criteria, and how transparent they will be.

Writing may be too much for some participants; one client suggested voice-to-text-to-voice.

🎨 Final screens

Based on the feedback from concept testing, I did a ton of tweaking when designing Release 1. The two main changes were:

For the first release, we’re starting with AI-generated multiple-choice questions instead of open-ended ones, which are easier to manage and reduce the risk of hallucinations.

We’ll offer up to five scenarios, created by our content engineers, so the TTX initiator can choose one before using AI to generate new ones.

Some flows needed low-fidelity designs first, but for parts like TTX generation, I went straight to high-fidelity. It’s early, so there’ll be plenty of tweaks and feedback. Having a component library helped speed things up.

Scenario Selection: Users can either choose from pre-made scenarios or generate a new one.

Scenario Generation: To generate a custom scenario, the TTX initiator answers a few questions, which the AI uses to create it.

Lobby Page: Participants can review the scenario, roles, and structure of the exercise. They can also invite others and assign roles.

Participant Invitation: When inviting participants, the initiator can assign roles to each person.

Exercise Page: Users track their progress, pick answers, view participants, and check who has voted. The left side shows the evolving narrative with artifacts.

Vote results & instant feedback: Once everyone votes, they see how their choices affected the exercise in real time.

Evaluation Generation Page: Shows the evaluation process as it's being built.

Evaluation Page: Offers both team and individual assessments, with a summary and a detailed section-by-section breakdown. The “lessons learned” section is one of the most important parts.
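The voting step on the Exercise Page (results stay hidden until everyone has voted, then the team’s choice drives the next part of the narrative) can be sketched as a small tally function. This rests on assumptions the case study doesn’t confirm: the team outcome is treated as a simple majority, ties go to the option listed first, and `tally` with its argument names is hypothetical.

```python
from collections import Counter
from typing import Optional


def tally(votes: dict[str, str], participants: list[str],
          options: list[str]) -> Optional[tuple[str, dict[str, int]]]:
    """Return (winning option, counts per option), or None while votes are missing.

    Assumptions: the team outcome is a simple majority, and ties go to the
    option listed first.
    """
    if any(p not in votes for p in participants):
        return None  # keep results hidden until every participant has voted
    counts = Counter(votes[p] for p in participants)
    # max() returns the first maximal item, so ties resolve to the earliest option.
    winner = max(options, key=lambda o: counts[o])
    return winner, {o: counts[o] for o in options}


options = ["A", "B", "C"]
participants = ["ana", "ben", "cy"]
pending = tally({"ana": "B", "ben": "B"}, participants, options)
result = tally({"ana": "B", "ben": "A", "cy": "B"}, participants, options)
```

Returning `None` until every vote is in mirrors the “check who has voted” behavior. The per-option counts are what a results screen could show alongside the narrative consequence.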

Live Product

✍🏻 Some reviews

📝 Lessons learned

This project was complex, with multiple personas, each with different pain points, and quite a few risks. Still, we had great collaboration with content engineers, SMEs, clients, top management, and customer success. That said, a few problems could have been prevented:

Interviewing users with strong client bias limited the diversity of insights.

Having Ashu, our co-founder, on some calls might have introduced bias, as users may have felt less comfortable sharing honest feedback.

We didn’t assess AI and technical feasibility early, which led to avoidable iterations.

Aligning product design with content engineering, dev and LLM teams sooner would have streamlined the process.

💬 Feedback From My Supervisor

I had the pleasure of working with Tsov on a particularly complex and ambitious project at TryHackMe. She led the end-to-end discovery, user research, and prototyping for a major new product initiative, taking on a scope that spanned well beyond core design work.

Tsov conducted user interviews, researched adjacent products, and gathered insights to shape our strategy. She wore multiple hats — not just as a designer building wireframes, mockups, and clickable prototypes, but also stepping into a product management role to scope features, manage stakeholders, and drive the project forward. She even used AI tools like Bolt to build functional MVPs we could test with real users.

Throughout the project, Tsov was highly self-organized, adaptable, and proactive. She worked independently where needed but was also a strong collaborator across our team. Despite the complexity and steep cybersecurity learning curve, she handled challenges with creativity, initiative, and a positive attitude.

I’d happily recommend Tsov to any team looking for a talented, flexible designer who can take ownership, communicate clearly, and deliver high-quality work from concept through to launch.